How to scale Node.js applications?

To understand the scaling of Node.js applications, we first need to understand what problem scaling is solving.

As you may know, Node.js runs your JavaScript code on a single main thread. Instead of serving every incoming request on a separate thread, it serves every request through that main thread. This can be a bit confusing, so let's look at it in more detail.

If a request blocks the main thread, it affects other incoming requests. Let's say you have a simple route /user-profile that keeps the main thread busy for 1 second per request. If 3 /user-profile requests are made at the same time, this will happen:

- one of the requests will be completed in 1 second,

- another in 2 seconds (because it has to wait 1 second for the first one),

- and the third in 3 seconds (after waiting 2 seconds for the previous 2 requests to complete).
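
The queueing described above can be reproduced with a small sketch. The names `blockFor` and `simulateRequests` are made up for this illustration, and the handler blocks for 100 ms instead of 1 second just to keep the demo short:

```javascript
// Simulates a CPU-bound handler: the loop keeps the main thread busy
// for at least `ms` milliseconds, so nothing else can run in the meantime.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // busy-wait
  }
}

// "Sends" `count` requests at the same moment and resolves with the time
// (in ms since the start) at which each of them finished.
function simulateRequests(count, workMs) {
  const start = Date.now();
  const pending = [];
  for (let i = 0; i < count; i++) {
    pending.push(
      new Promise((resolve) => {
        // setImmediate queues the handler, like a request waiting for the main thread.
        setImmediate(() => {
          blockFor(workMs);
          resolve(Date.now() - start);
        });
      })
    );
  }
  return Promise.all(pending);
}

simulateRequests(3, 100).then((finishedAt) => {
  // Each entry is at least `workMs` later than the previous one,
  // because the handlers run one after another.
  console.log(finishedAt);
});
```

Even though all three "requests" arrive together, their completion times form a staircase, just like in the list above.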

However, Node.js can offload many tasks to the operating system kernel or to its internal libuv thread pool, both of which are multithreaded. Here are some examples of these tasks:

- fs.readFile()

- dns.lookup()

- zlib.gzip()

- crypto.pbkdf2()

The first 2 tasks are very input/output intensive, and the last 2 are CPU intensive.

So when the operating system is executing tasks, the Node.js main thread can switch to complete other requests. The operating system enables Node.js to be reasonably fast with its single main thread model.
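
Here is a minimal sketch of that behaviour, using the asynchronous `crypto.pbkdf2()` from the list above. The function name `deriveKeyInBackground` and the password, salt, and iteration count are arbitrary values chosen for the example:

```javascript
const crypto = require('node:crypto');

// Starts an expensive key derivation and records the order in which things happen.
function deriveKeyInBackground() {
  const events = [];
  return new Promise((resolve, reject) => {
    // The asynchronous variant returns immediately; the heavy work runs off the main thread.
    crypto.pbkdf2('secret', 'salt', 100000, 64, 'sha512', (err) => {
      if (err) return reject(err);
      events.push('pbkdf2 finished');
      resolve(events);
    });
    // This line runs before the callback above, because the main thread was never blocked.
    events.push('main thread is free');
  });
}

deriveKeyInBackground().then((events) => console.log(events));
// → [ 'main thread is free', 'pbkdf2 finished' ]
```

With the synchronous `crypto.pbkdf2Sync()` the order would be reversed, because the main thread would have to wait for the result.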

The problem happens when Node.js has too many tasks that can't be delegated this way. Those tasks then block the main Node.js thread, and requests start to queue up.

This can result in an ugly scenario like this:

- an arriving request may have to wait for a busy Node.js server to pick it up and handle it,

- the longer the server is blocked, the longer the waiting time,

- the higher the number of requests, the longer the waiting time.

Since Node.js serves all requests through a single main thread, additional requests cannot be served simply by adding more CPUs, because a single thread cannot run on more than 1 CPU core at a time.

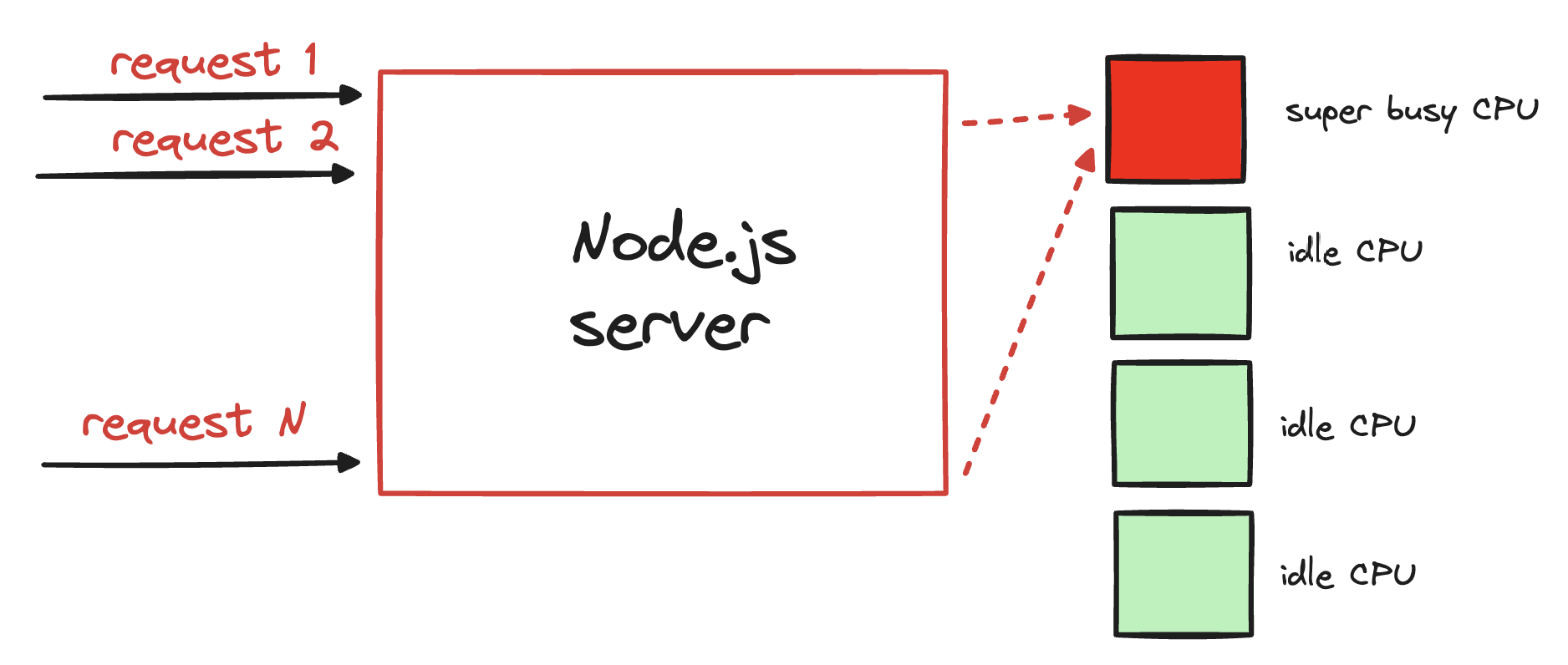

Here is how this scenario looks:

Requests are waiting and busy Node.js process trying to complete as many tasks as it can. There we have one CPU that is on fire while others are chillin'.

This is the scalability issue: adding more hardware doesn't solve the performance problem.

Solution 1 - worker threads

We can use worker threads to execute CPU-intensive JavaScript code and free up the Node.js main thread.

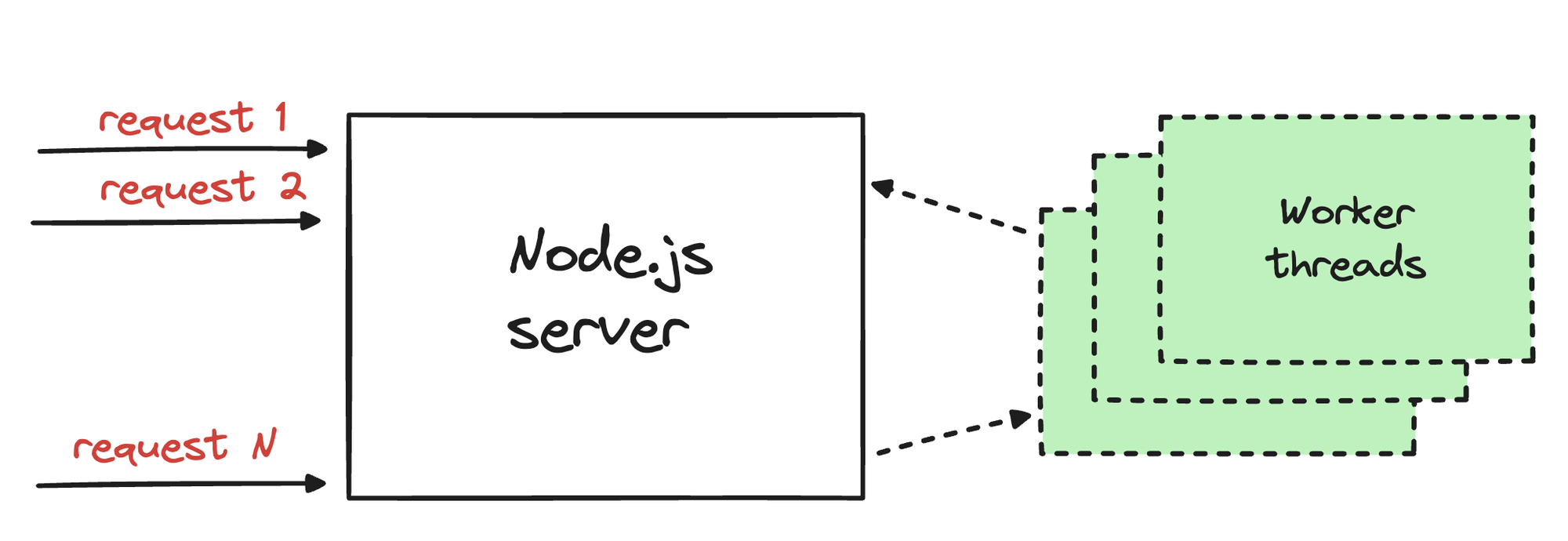

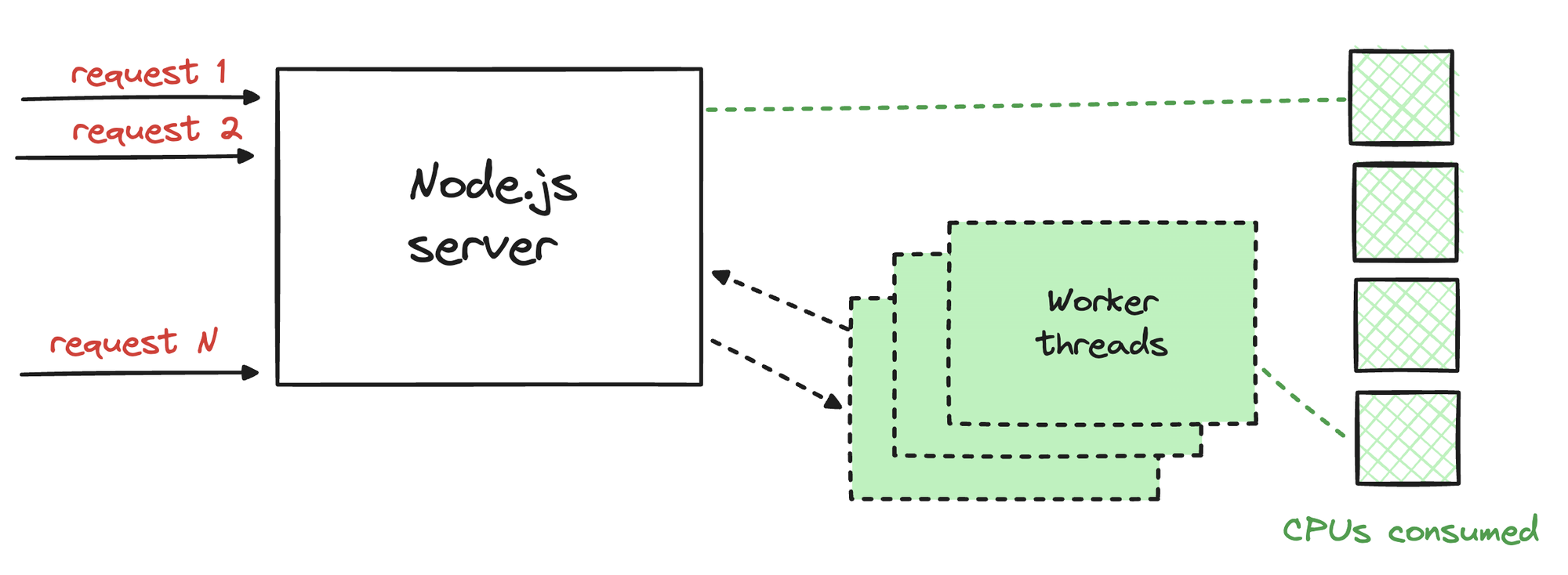

Here is how it looks:

So every request is still handled by the main Node.js thread but intensive JavaScript code is executed within worker threads. Those worker threads report on completion to their boss, the main Node.js thread.

The tricky part with worker threads and using them efficiently is to identify and isolate intensive parts of the code. Which can be hard depending on how complex is your application's business logic. The Node.js worker_threads module must execute those intensive code parts.

Here is how it looks with worker threads:

With CPU-intensive JavaScript code running on workers, the Node.js process can use additional CPU cores. Also, the main thread is freed up to serve more requests.
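
As a rough sketch of this idea, the snippet below moves a deliberately slow Fibonacci calculation into a worker thread. The `fibInWorker` helper and the Fibonacci workload are assumptions made for the example, and the worker code is inlined as a string (via the `eval: true` option) only to keep everything in one file. In a real project it would usually live in its own file:

```javascript
const { Worker } = require('node:worker_threads');

// Code that runs inside the worker thread.
const workerSource = `
  const { parentPort, workerData } = require('node:worker_threads');
  // Deliberately naive, CPU-heavy Fibonacci.
  const fib = (n) => (n < 2 ? n : fib(n - 1) + fib(n - 2));
  // Report the result back to the main thread.
  parentPort.postMessage(fib(workerData));
`;

// Runs the calculation on a worker thread and resolves with its result.
function fibInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: n });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`Worker stopped with exit code ${code}`));
    });
  });
}

// The main thread stays free to handle other requests while the worker computes.
fibInWorker(30).then((result) => console.log(result)); // → 832040
```

Starting a new worker for every call is the simplest option, but it has a startup cost. A pool of long-lived workers is a common refinement.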

This approach is known as vertical scaling. Vertical scaling means scaling by adding more resources to a single server.

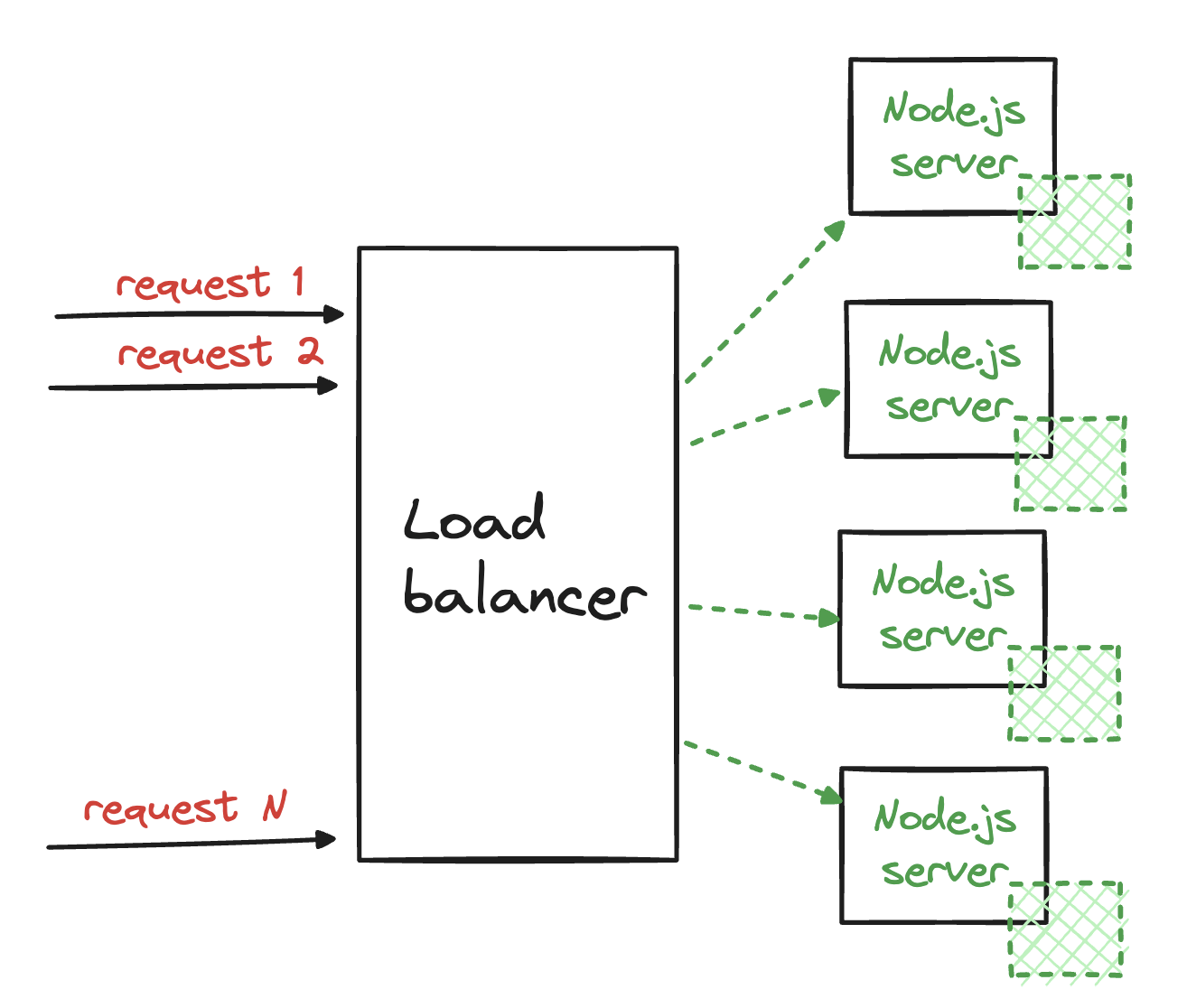

Solution 2 - multiple Node.js instances

We can run multiple instances of our Node.js server process to serve the requests. The load balancer can distribute those incoming requests between the Node.js processes:

This approach can be more convenient as it doesn't require steps like identifying CPU-intensive JavaScript code, thread communication, etc. With this approach, we utilize all the available CPU cores.
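
How a load balancer picks a process is not specified here. One simple and common strategy is round-robin, sketched below under that assumption. The `createRoundRobin` helper and the local addresses are hypothetical:

```javascript
// Returns a function that hands out backends in rotation.
function createRoundRobin(backends) {
  if (backends.length === 0) {
    throw new Error('At least one backend is required');
  }
  let next = 0;
  return function pick() {
    const backend = backends[next];
    next = (next + 1) % backends.length; // wrap around after the last backend
    return backend;
  };
}

// Hypothetical Node.js processes running on the same machine.
const pick = createRoundRobin([
  'http://127.0.0.1:3001',
  'http://127.0.0.1:3002',
  'http://127.0.0.1:3003',
]);

// Four incoming requests: the fourth one goes back to the first process.
for (let i = 0; i < 4; i++) {
  console.log(pick());
}
```

In practice you would rarely write this yourself, since tools such as Nginx or pm2 (covered later) do the distribution for you.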

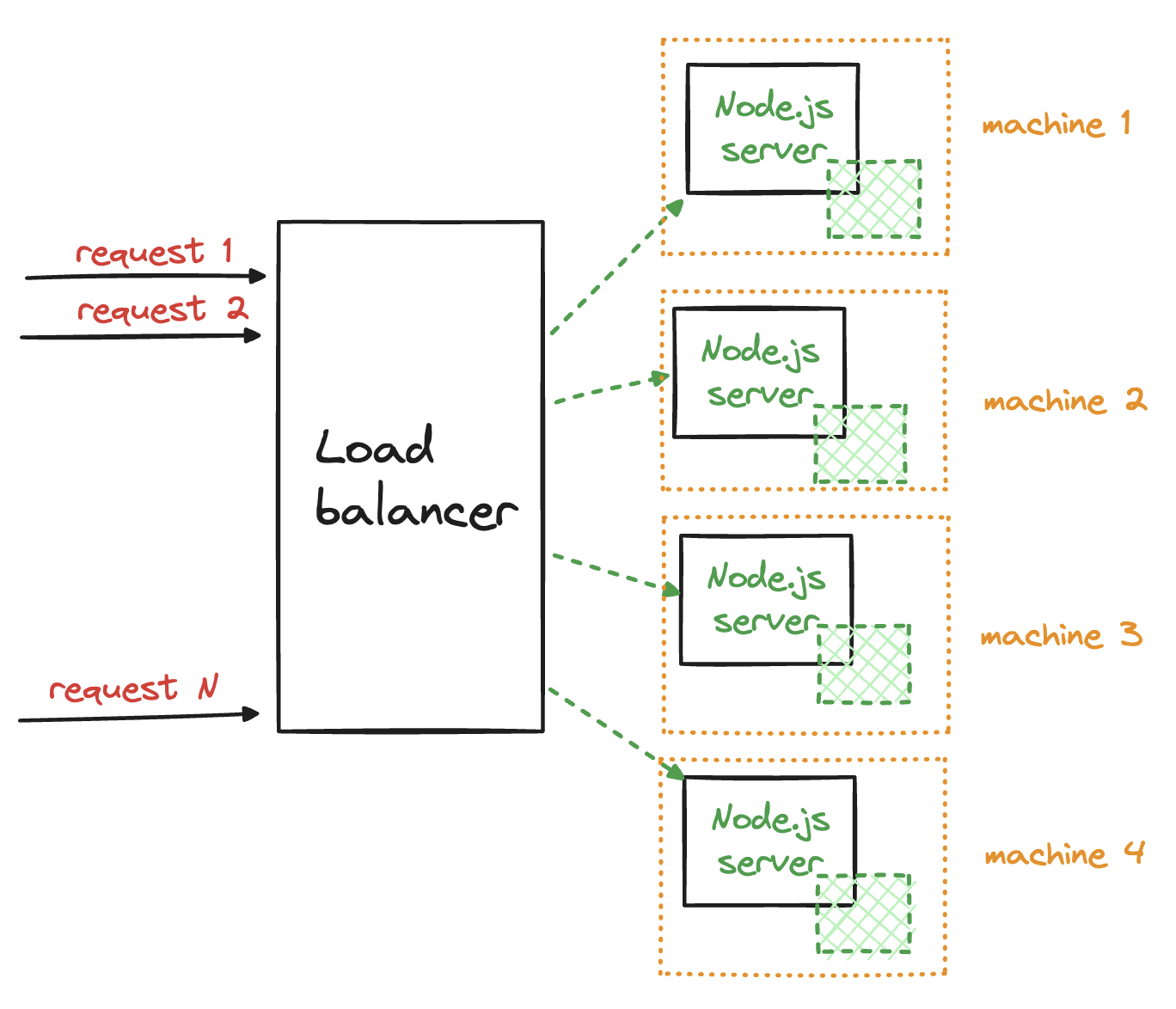

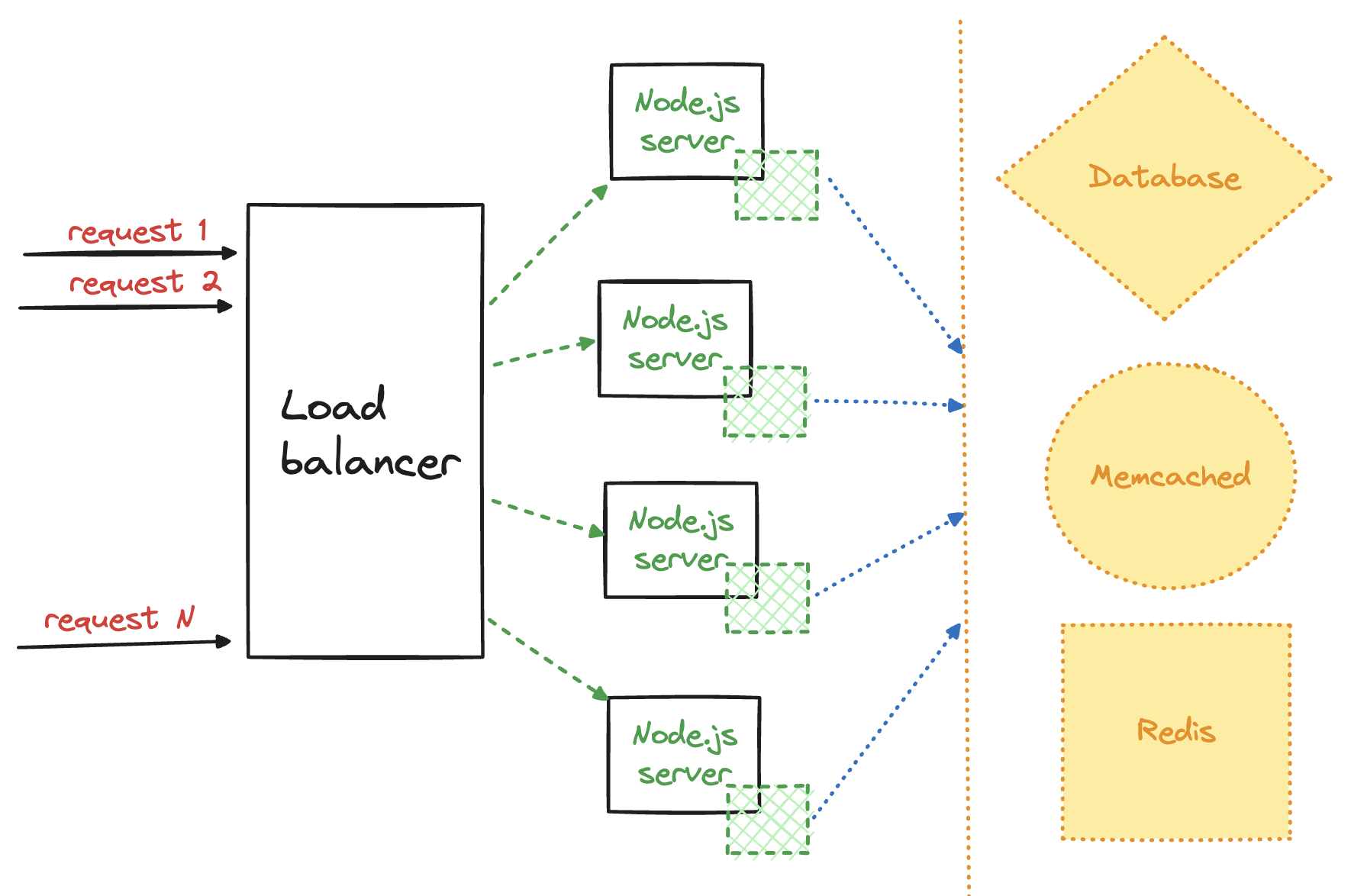

Another variant of this approach is to use different machines/computers to run the Node.js processes:

The load balancer distributes incoming requests across Node.js processes run on different machines in this variant. If the load increases, more machines can be added to increase capacity.

This way is known as horizontal scaling. Horizontal scaling means scaling by adding more nodes/servers to the setup.

This horizontal scaling setup can be built cost-effectively in the cloud (Amazon Web Services, Google Cloud Platform, Microsoft Azure, etc).

In the cloud, a server machine is a virtual machine that can be quickly added or removed with a single command. We can make a cost-effective setup by deploying the low-capacity virtual machine that can be added/removed depending on the requests load.

An important thing to remember when scaling Node.js applications this way is to respect one rule: The Node.js process serving a request should not store anything session-specific in its memory.

Breaking this rule will require consecutive requests from that session to be served by the same Node.js server instance. So even if that instance is super busy and others are free, it still must serve that specific request.

To prevent this, Node processes should store session-specific data in the store accessible to every other Node process in the setup:

This way, any Node server instance can serve consecutive requests from any session by looking up that session data from the common store. A database like Postgres or MySQL or an in-memory store like Memcached or Redis can store session-specific data.
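
The access pattern can be sketched as follows. Note the assumptions: `SharedSessionStore` is a made-up class backed by a plain `Map`, which lives in a single process and only stands in for a real shared store such as Redis or Memcached. The two "instances" are plain objects, not real servers:

```javascript
// Stand-in for a shared store (Redis, Memcached, a database...).
// A Map only exists in one process, so it is used here purely for illustration.
class SharedSessionStore {
  constructor() {
    this.sessions = new Map();
  }
  async get(id) {
    return this.sessions.get(id) ?? null;
  }
  async set(id, data) {
    this.sessions.set(id, data);
  }
}

// An "instance" keeps no session data of its own; it always goes through the store.
function createInstance(name, store) {
  return {
    async handle(sessionId) {
      const session = (await store.get(sessionId)) ?? { visits: 0 };
      session.visits += 1;
      await store.set(sessionId, session);
      return `${name} served visit ${session.visits}`;
    },
  };
}

async function demo() {
  const store = new SharedSessionStore();
  const a = createInstance('instance-a', store);
  const b = createInstance('instance-b', store);
  // Two consecutive requests from the same session land on different instances.
  return [await a.handle('s1'), await b.handle('s1')];
}

demo().then((responses) => console.log(responses));
// → [ 'instance-a served visit 1', 'instance-b served visit 2' ]
```

Because the visit counter lives in the store, the second instance continues where the first one left off.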

Managing scalable setup

When you have a scalable Node.js application setup, you need management tools for that setup. From my experience, these 3 tools are the most popular and time-proven:

- Nginx with systemd

- pm2

- Docker

There is no silver bullet. Any of these can be used depending on your setup.

Nginx with systemd is ideal when you need neither DevOps automation nor a horizontally scalable setup. Nginx can take the role of load balancer and distribute incoming requests. Systemd can manage Node.js processes (e.g. restart them on crash) and take care of logs with Syslog.

pm2 is ideal when you are looking for a Node-based solution and you need neither DevOps automation nor a horizontally scalable setup. It can do load balancing with its cluster mode, which is built on the Node.js cluster module. It can also start, stop, and restart Node.js processes while managing logs.

Docker is best suited when you need DevOps automation and a horizontally scalable setup. It also works excellently in combination with cloud services. Docker can be used to manage Node.js processes running within Docker containers. Cloud services can provide a way to add/remove virtual machines with a single command. DevOps automation can automatically start/stop virtual machines based on the request load.

Each virtual machine runs a Docker container in which the Node.js instances live. In this case, the load balancer can be a cloud-based managed service, like AWS Application Load Balancer.

Node.js task and log management is done via Docker container services. DevOps automation creates virtual machines from Docker images and updates the load balancer with the changes.

Verdict

There is no magic formula or best recipe to manage the scaling of Node.js applications.

If you are out of ideas, you can use some of the tools described above. These tools have stood the test of time, but don't hesitate to manage your setup by your own rules.

If you have a small Node.js application, you don't need to complicate things: you don't have to have full automation and tons of Docker containers. On the other hand, if you have a complex application with a lot of services, consider virtual machines and containers in combination with other options.
